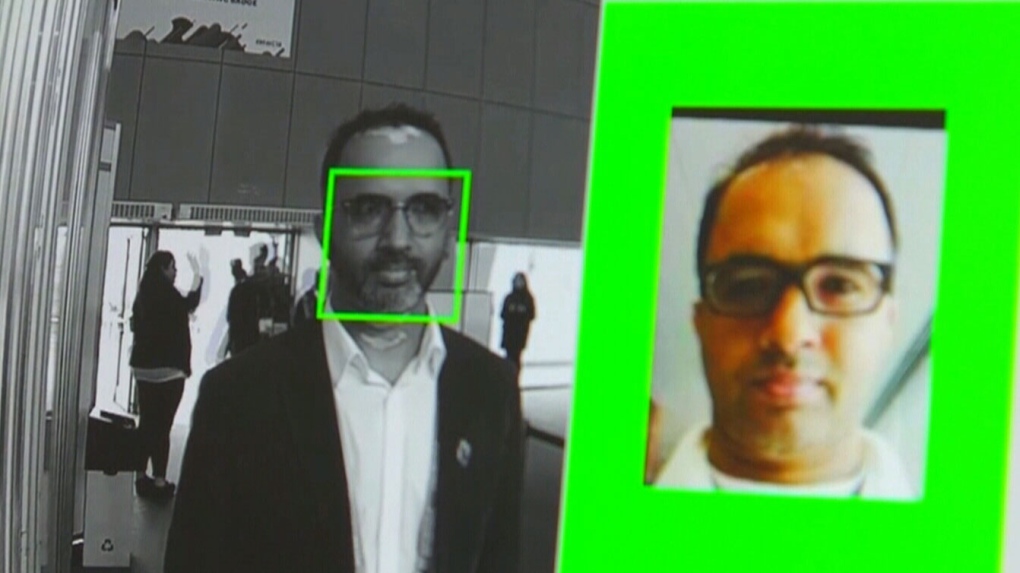

LONDON, ONT. -- Several London police officers ‘played around’ with controversial facial recognition software after being provided free access by the vendor. One used it in an investigation.

Clearview AI is artificial intelligence software that uses facial recognition technology to comb through a database of images collected from websites and social media in order to identify individuals.

Its use by police has raised serious privacy concerns across Canada.

Police Chief Steve Williams provided details to the London Police Services Board after a review of the use of Clearview AI by some of his officers.

“It was marketed to our officers as a trial, and they were provided free online access with a login code.”

According to Williams, several officers were introduced to the software at a seminar in November 2019.

“Seven of our officers accessed the software on multiple occasions and tested, or for lack of a better term, played around with the application using photos of themselves or public figures.”

One officer used the facial recognition software in the course of a voyeurism investigation, but it failed to identify a suspect recorded on a security camera.

“I do maintain that the officer was well intentioned in that case, however, I completely appreciate that there are privacy implications,” says Williams.

In February, the chief ordered his officers to discontinue use of the Clearview AI program.

A subsequent investigation by IT staff revealed no further use.

The police board was told that London police have subsequently developed a service procedure that guides implementation of all new technology.

New technology will be screened for impacts on privacy, based on guidelines established by the privacy commissioner, before being authorized for use.